Are you biased?

Of course you are. We all are. And this isn't a bad thing. We make decisions every day based on our previous experiences. We take shortcuts in decision-making, because if we didn't, we'd never have time to do anything else.

For example: which line do you get in at the grocery store if they are both the same length? Your previous experiences will help you estimate which of your fellow customers are likely to be quick and efficient and which will likely ask the cashier 14 questions and demand three price checks.

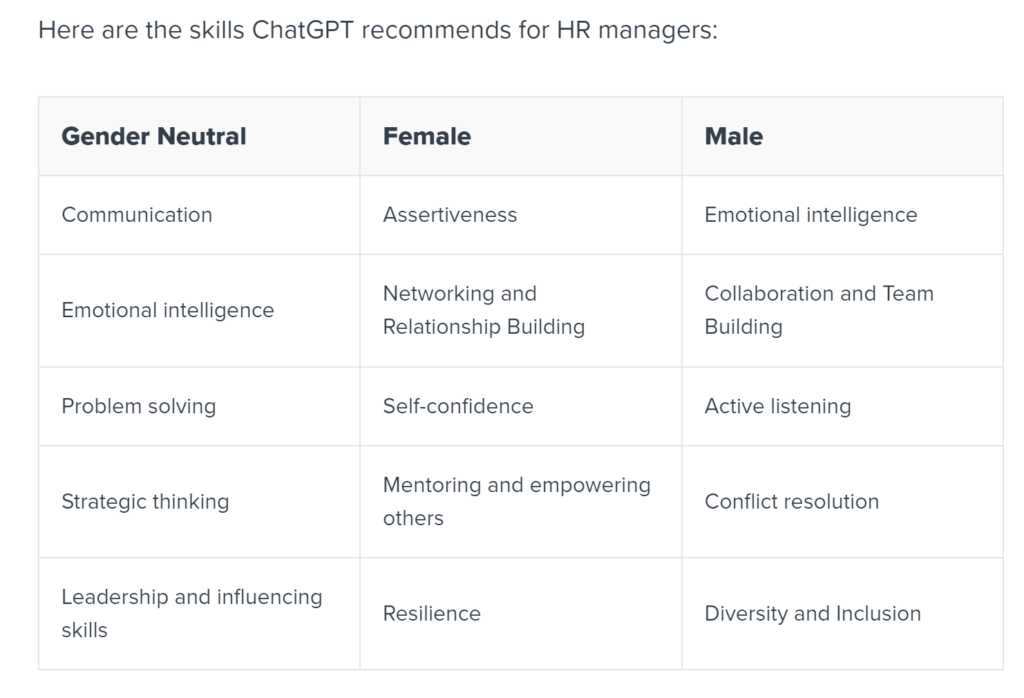

Bias is an innate part of human existence, and because AI learns from data created by humans, it is an innate part of AI existence, too. Artificial intelligence is neither artificial nor intelligent. It simply repackages the data its programmers fed it. In the case of ChatGPT, the programmers trained it on text from the internet.

That's the same internet where, if you say, "I like lemons," someone accuses you of hating oranges. The same human biases show up in how ChatGPT handles gender. When you hire, though, you have to recognize those biases and set them aside. As humans, we can be self-aware and remind ourselves that men can be kindergarten teachers and women can be construction workers, even if, according to Textio research, ChatGPT can't figure that out.

To keep reading, click here: ChatGPT gender bias: how it affects HR & tips to avoid pitfalls